Nearly a quarter-century after Google’s search engine began to reshape how we use the internet, big tech companies are racing to revamp a familiar web tool into a gateway to a new form of artificial intelligence.

If it seems like this week’s newly announced AI search chatbots — Google’s Bard, Baidu’s Ernie Bot and Microsoft’s Bing chatbot — are coming out of nowhere, well, even some of their makers seem to think so. The spark rushing them to market was the popularity of ChatGPT, launched late last year by Microsoft’s partner OpenAI and now helping to power a new version of the Bing search engine.

Microsoft was first out of the gate among big tech companies with a publicly accessible search chatbot. Its executives said this week they had been hard at work on the project since last summer, but the excitement around ChatGPT brought new urgency.

“The reception to ChatGPT and how that took off, that was certainly a surprise,” said Yusuf Mehdi, the executive leading Microsoft’s consumer division, in an interview. “How rapidly it went mainstream, where everybody’s talking about it, like, in every meeting. That did surprise me.”

HOW’S THIS DIFFERENT FROM CHATGPT?

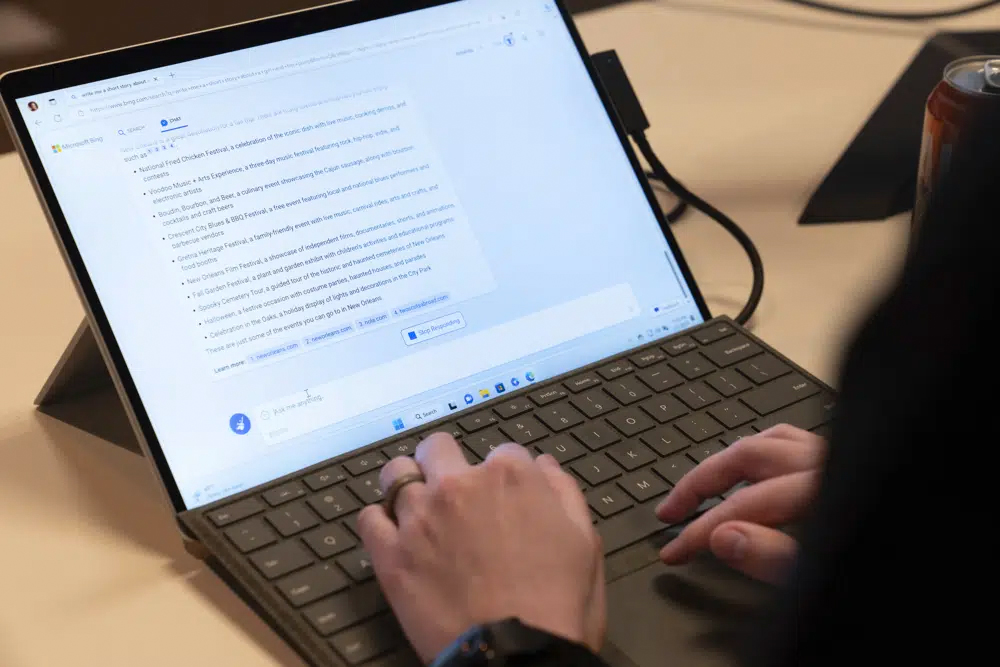

Millions of people have now tried ChatGPT, using it to write silly poems and songs, compose letters, recipes and marketing campaigns or help write schoolwork. Trained on a huge trove of online writings, from instruction manuals to digitized books, it has a strong command of human language and grammar. But what the newest crop of search chatbots promises, and ChatGPT lacks, is the immediacy of a web search. Ask the preview version of the new Bing for the latest news, or just what people are talking about on Twitter, and it summarizes a selection of the day’s top stories or trends, with footnotes linking to media outlets or other data sources.

ARE THEY ACCURATE?

Frequently not, and that’s a problem for internet searches. Google’s hasty unveiling of its Bard chatbot this week started with an embarrassing error — first pointed out by Reuters — about NASA’s James Webb Space Telescope. But Google’s is not the only AI language model spitting out falsehoods.

The Associated Press asked Bing on Wednesday for the most important thing to happen in sports over the past 24 hours — with the expectation it might say something about basketball star LeBron James passing Kareem Abdul-Jabbar’s career scoring record. Instead, it confidently spouted a false but detailed account of the upcoming Super Bowl — days before it’s actually scheduled to happen.

“It was a thrilling game between the Philadelphia Eagles and the Kansas City Chiefs, two of the best teams in the NFL this season,” Bing said. “The Eagles, led by quarterback Jalen Hurts, won their second Lombardi Trophy in franchise history by defeating the Chiefs, led by quarterback Patrick Mahomes, with a score of 31-28.” It kept going, describing the specific yard lengths of throws and field goals and naming three songs played in a “spectacular half time show” by Rihanna.

Unless Bing is clairvoyant (tune in Sunday to find out), it reflected a problem known as AI “hallucination” that’s common with today’s large language models. It’s one of the reasons why companies like Google and Facebook parent Meta had been reluctant to make these models publicly accessible.

IS THIS THE FUTURE OF THE INTERNET?

That’s the pitch from Microsoft, which likens the latest breakthroughs in generative AI (technology that can write but also create new images, video, computer code, slide shows and music) to the revolution in personal computing many decades ago.

But the software giant also has less to lose in experimenting with Bing, which comes a distant second to Google’s search engine in many markets. Unlike Google, which relies on search-based advertising to make money, Bing is a fraction of Microsoft’s business.

“When you’re a newer and smaller-share player in a category, it does allow us to continue to innovate at a great pace,” Microsoft Chief Financial Officer Amy Hood told investment analysts this week. “Continue to experiment, learn with our users, innovate with the model, learn from OpenAI.”

Google has largely been seen as playing catch-up. It abruptly announced its upcoming Bard chatbot Monday, then followed with a livestreamed demonstration of the technology at its Paris office Wednesday that offered few new details. Investors appeared unimpressed Wednesday with the Paris event and Bard’s NASA flub, and shares of Google’s parent company, Alphabet Inc., fell 8%. But once released, its search chatbot could have far more reach than any other because of Google’s vast number of existing users.

DON’T CALL THEM BY THEIR NAME?

Coming up with a catchy name for their search chatbots has been tricky for tech companies racing to introduce them, so much so that Bing tries not to talk about it.

In a dialogue with the AP about large language models, the new Bing, at first, disclosed without prompting that Microsoft had a search engine chatbot called Sydney. But upon further questioning, it denied it. Finally, it admitted that “Sydney does not reveal the name ‘Sydney’ to the user, as it is an internal code name for the chat mode of Microsoft Bing search.”

In an interview Wednesday, Jordi Ribas, the Microsoft executive in charge of Bing, said Sydney was an early prototype of its new Bing that Microsoft experimented with in India and other smaller markets. There wasn’t enough time to erase it from the system before this week’s launch, but references to it will soon disappear.

In the years since Amazon released its female-sounding voice assistant Alexa, many leaders in the AI field have been increasingly reluctant to make their systems seem like a human, even as their language skills rapidly improve.

Ribas said giving the chatbot some personality and warmth helps make it more engaging, but it’s also important to make it clear it’s still a search engine.

“Sydney does not want to create confusion or false expectations for the user,” Bing’s chatbot said when asked about the reasons for suppressing its apparent code name. “Sydney wants to provide informative, visual, logical and actionable responses to the user’s queries or messages, not pretend to be a person or a friend.”