In April 2023, Alwaleed Philanthropies (AP) conducted a social experiment on the Metaverse to determine how individuals would respond to verbal abuse in a virtual space.

Although both the perpetrators and victims were artificial simulations, the experiment exposed some of the realities around combatting ethno-racial prejudice in the Metaverse.

The prevalence of group apathy or normalising attitudes was not as concerning as the challenges faced by users who attempted to report abusive behaviour. Rather than a standardised mechanism for users to escalate anti-social behaviour, communities in the Metaverse are generally expected to self-organise and safeguard users on their own.

As the world increasingly ties its future, livelihoods, and outlook on human connectivity to immersive technology, this experiment highlights the need for a near trillion-dollar industry to invest in robust safeguarding mechanisms and create an online ecosystem that is safe for everyone.

The experiment

Each of the victims’ avatars was created to appear to be of a different ethnic or religious background, and this background was the basis of the verbal abuse and harassment directed at them by the perpetrators’ avatars. All the instances of abuse took place in crowded parts of popular Metaverse platforms: Decentraland, Sandbox and Spatial.

The experiment sought to understand whether users were willing to intervene, and how long it would take for them to do so. In practice, 70% of bystanders showed no response at all, and when an intervention did take place, it took an average of 2 minutes and 2 seconds.

Alwaleed Philanthropies identified a notable discrepancy depending on the type of abuse, specifically whether it targeted race or religion. In incidents where the abuse was fuelled by religious intolerance, at least one user responded in 50% of the experiments.

Meanwhile, in the three experiments where an individual was subject to racial abuse and discrimination, no users intervened, even though these incidents took place in typically crowded areas of the platforms.

Reflections

With only 2.8% of users responding to instances of discrimination, this experiment highlights the need to foster a sense of accountability among both the users and the architects of digital spaces such as the Metaverse.

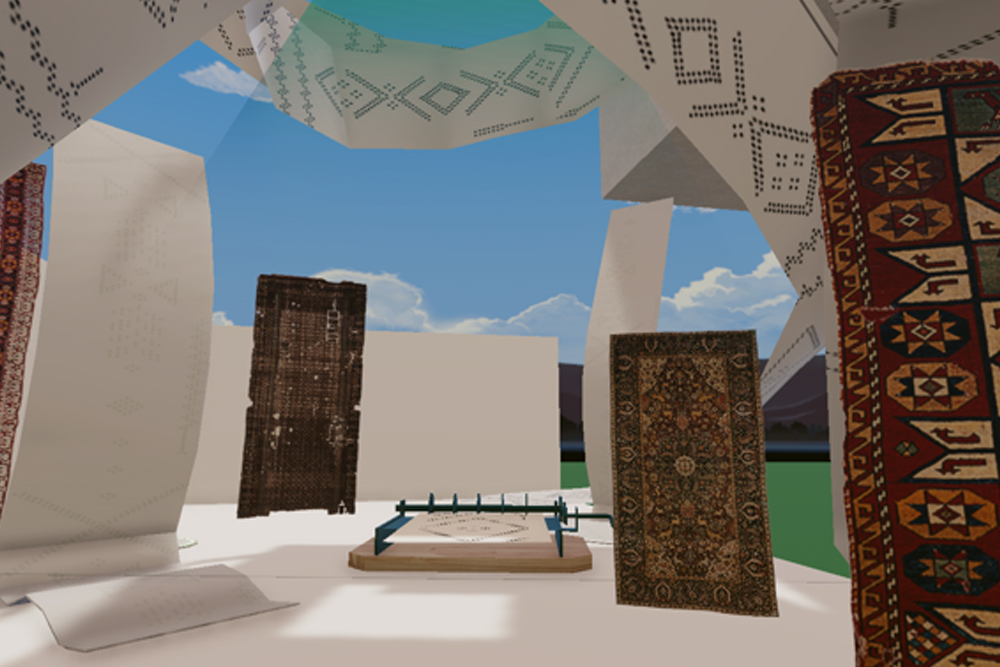

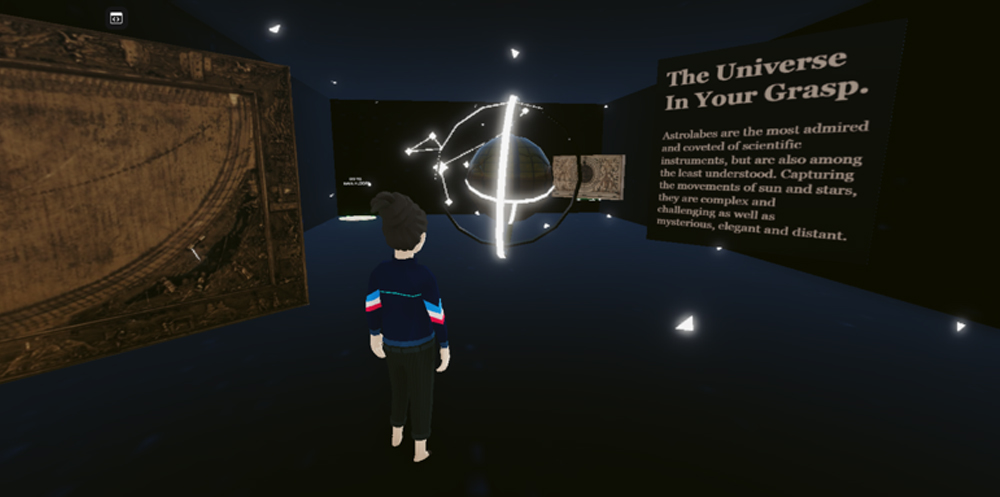

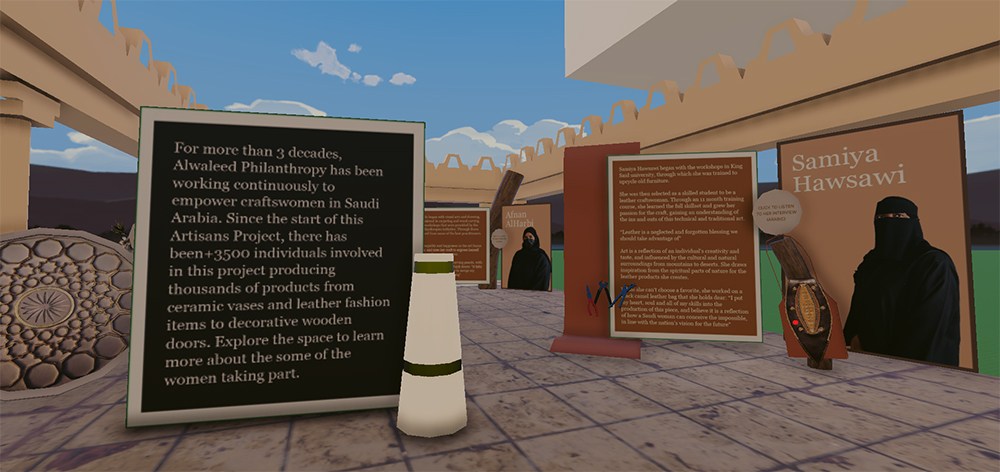

Organisations such as Alwaleed Philanthropies remain committed to using their platforms to combat social misconceptions and promote meaningful inclusion in the Metaverse. In 2022, Alwaleed Philanthropies launched a virtual centre in the Metaverse that uses cultural dialogue, artistic exhibitions, and historical artefacts as tools to bridge cultures.

At a time when social norms have evolved alongside a shared vision for tolerance, justice, and cohesion, it is critical that our virtual spaces are held to the same standards. The world of immersive technology is an extension of today’s world, including the social conventions and regulations that are in place to protect people.

We cannot allow the Metaverse to become a landscape where the inclusion and freedom we fight for are not protected. The outcomes of this experiment affirm the need for more productive and responsible use of immersive platforms in the Metaverse.

Although Alwaleed Philanthropies’ experiment only provides a brief snapshot of the issues surrounding bullying in the Metaverse, it is clear that more should be done to safeguard users from verbal assault, bullying, and intolerance in immersive spaces.